A Car Parts Manufacturing Company Use Case

1. Introduction & Business Goals

Back when I was still in engineering school, I had an internship at a small tech assistance company in the south of France. One day, we were called out to help a regional car parts manufacturer that also doubled as a dealership. They had an entire professional setup, covering everything from designing custom DAO models (DAO being the French acronym for computer-aided design, CAD) to stock management, sales confirmation, and even packaging and delivery. A single system handled all of it, including storing the DAO models used for machine operations.

On that day, their server crashed. Our task was to back up the existing hard drives, install a new server rack, and replace some cables—a job that cost them several thousand euros. It was a pretty standard intervention.

But what really caught my attention was the software they were using. While the company was clearly professional, their ERP system was this outdated Java applet from the 2000s, and it looked ancient. I couldn’t help but wonder how much they were losing in efficiency and “quality of life” in user experience just to keep the data “safe.”

In my memory it looked something like that:

Fast forward a few years, and I was still thinking about that experience. If I wanted to help that company, how would I modernize the workflow while keeping the data secure, reducing costs, and avoiding downtime? Sure, you wouldn’t want to risk exposing sensitive designs and business data to some random server in the U.S., but what about the risks of local servers catching fire or files getting stolen internally?

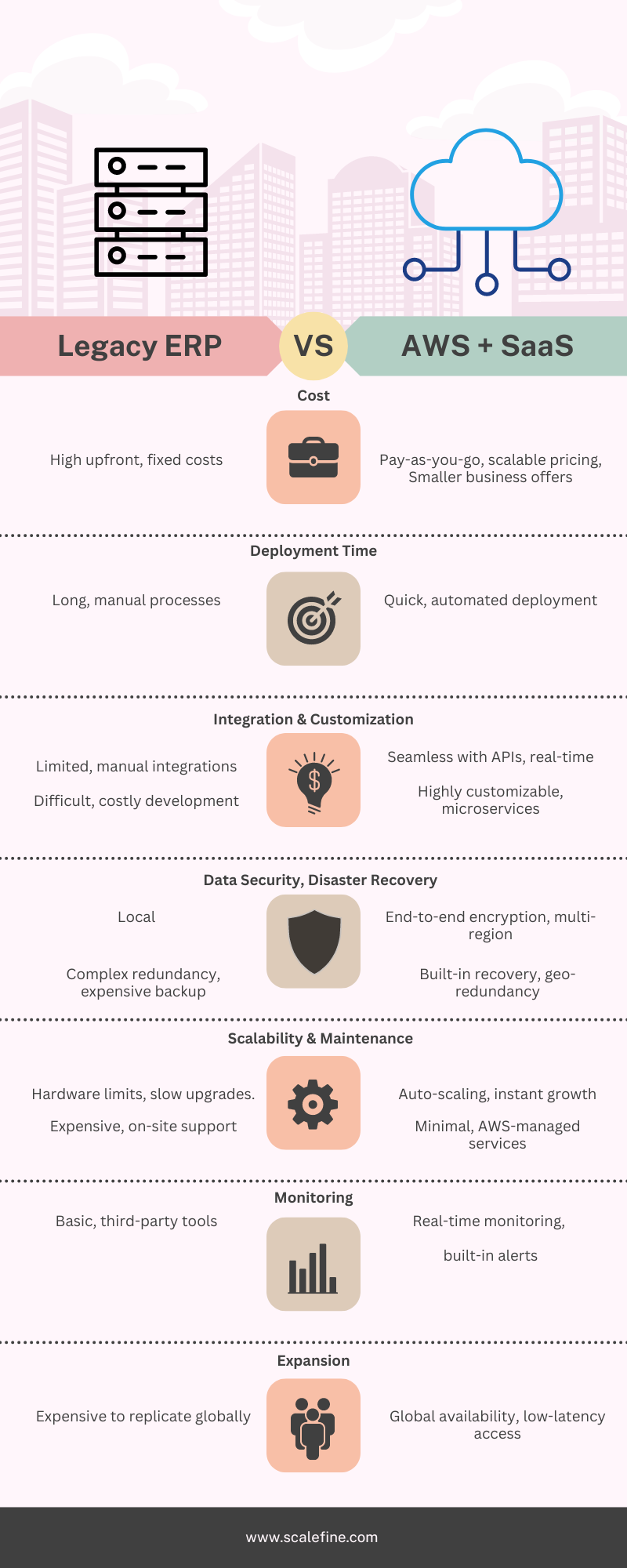

That’s when the idea of a digital transformation combining AWS and SaaS solutions started to make a lot more sense. SaaS (Software as a Service) refers to software hosted on cloud platforms and provided on a subscription basis. Instead of installing software on individual devices, businesses access tools like CRM (Customer Relationship Management) systems, financial platforms, and order management software directly over the internet. SaaS offers scalability, reduced maintenance, and seamless updates, all managed by the service provider, which frees businesses from running complex applications themselves.

By pairing AWS’s cloud services with specialized SaaS providers, you can centralize operations, encrypt sensitive data, and limit the impact of a leak by splitting data across providers. With critical data distributed across platforms, a breach or outage at any single provider exposes only part of it rather than compromising everything.

For example, sensitive DAO files and intellectual property can be stored with client-side encryption on AWS S3, while customer-facing operations such as order management or CRM can be handled by SaaS platforms like Salesforce or HubSpot. This combination reduces reliance on a single provider, provides geographic redundancy, and makes it easier to manage data privacy across jurisdictions, while modern, well-designed tools greatly improve the user experience.

2. Assessing Business Processes with AWS + SaaS

When embarking on a digital transformation journey, one of the first and most essential steps is to identify and map out all existing business processes, including both digital workflows and paper-based procedures. The goal is to ensure all elements are captured comprehensively, setting the foundation for process improvements, security measures, and automation.

As Paul Harmon notes in his book Business Process Change: A Business Process Management Guide for Managers and Process Professionals, “understanding how processes flow and where key bottlenecks exist is the foundation for all successful digital transformation initiatives.” This includes analyzing and documenting all workflows, both manual and automated, to identify areas where automation, AI, or cloud services could be introduced to enhance efficiency.

This holistic approach ensures that no process is overlooked and that the transition to digital is comprehensive and effective. With cloud services as a common foundation, the entire organization can align its processes to modern standards and build resilience through automation and centralized control.

For a more rigorous approach to building this inventory, large organizations usually rely on established frameworks, typically under the CTO’s direction. Frameworks such as ITIL, Six Sigma, Lean, COBIT, and TOGAF provide best practices and guidance for conducting a thorough business process analysis and ensuring an effective digital transformation.

- ITIL 4 (Information Technology Infrastructure Library)

- Focuses on aligning IT processes with business needs and ongoing process improvement.

- Six Sigma (DMAIC Framework)

- A structured, data-driven approach to improve processes, reduce variation, and eliminate inefficiencies.

- Lean (Lean Management)

- Helps streamline processes by eliminating waste and focusing on delivering value to the customer.

- COBIT 5 (Control Objectives for Information and Related Technologies)

- Ensures effective governance and management of enterprise IT, aligning processes with strategic goals.

- TOGAF – Business Architecture Phase

- Ensures that IT processes align with overall business architecture and strategic goals.

To get started faster at a smaller scale, we can sum up the most useful tips from these frameworks for listing existing processes and workflows:

- Engage Key Stakeholders Early: Involve department heads and process owners from the start for better process identification and buy-in.

- Categorize and Prioritize: Focus on high-impact processes first—those that directly affect business outcomes.

- Use Simple Visualizations: Utilize tools like BPMN or Lean Value Stream Mapping to create easy-to-understand process flows.

- Collect Metrics and Data: Use Six Sigma principles to gather data on current process performance to guide decisions.

- Document and Review Continuously: Follow ITIL’s Continual Improvement practices to ensure processes are regularly reviewed and optimized.

List of existing processes

Let’s assume that, after a first round of interviews and preliminary studies of the existing processes, we arrive at the following synthesis:

These business processes each bring unique added value, along with their respective bottlenecks and risks, which vary depending on the scale of the organization. This theoretical representation categorizes the processes into high-value and low-value. The high-value processes are those that contribute most significantly to the company’s core operations—optimizing them would directly impact revenue generation and business growth. On the other hand, lower-value processes, while still necessary, focus more on operational efficiency, and improvements would mainly reduce costs and losses.

For the sake of this introduction to digital transformation, let’s focus on the four highest-value processes. These are the processes where cloud services can have the greatest impact on workflow efficiency and long-term scalability.

- Managing DAO Schema Files

- Protects intellectual property and core designs that are central to manufacturing. Streamlining this process ensures secure collaboration, reduced errors, and efficient version control, directly impacting production timelines and product quality.

- Stocking and Delivery Preparation

- Accurate stock records and well-prepared deliveries determine whether orders ship correctly and on time. Reducing manual data entry and communication delays between departments directly cuts incorrect or late deliveries.

- Managing Customer Leads and Orders

- Efficient management of customer interactions and orders is essential for business growth. Real-time tracking enhances customer satisfaction and increases the potential for upselling and repeat business.

- Calculating Reserve Stocks

- Accurate stock predictions lead to cost savings and better supply chain management. Avoiding overstock or stockouts ensures the company runs more efficiently and profitably.

3. Critical Data in the Current System (Old ERP + Excel)

The company’s current processes depend heavily on an outdated mix of old ERP systems, Excel spreadsheets, and manual inputs. As a result, the way critical data is stored and managed introduces significant risks. Each process directly ties to specific types of critical data that are vulnerable due to manual handling and fragmented systems. Let’s take a closer look at the key processes and their associated critical data:

- DAO Schema Files

- Stored in: Local encrypted servers, Excel spreadsheets, or paper copies.

- Risk: High risk of data loss due to hardware failures or human error, version control issues from multiple copies, and lack of security measures, leading to potential leaks or unauthorized access.

- Stocking and Delivery Data

- Stored in: Excel spreadsheets, manually updated across departments, and sometimes on paper.

- Risk: Stock inaccuracies due to manual data entry, risk of inventory mismanagement, and communication delays leading to incorrect or delayed deliveries.

- Customer Leads and Orders

- Stored in: Old ERP system with (potentially) partially encrypted data, with additional tracking in Excel or Access databases.

- Risk: Fragmented data across systems, risk of data privacy breaches (if customer information is mismanaged), manual errors leading to order inaccuracies, and inefficiencies in tracking leads.

- Reserve Stock Calculations

- Stored in: Excel spreadsheets, based on manually input historical sales data.

- Risk: Frequent overstocking or stockouts due to outdated or incorrect data, manual miscalculations, and delayed decisions from lack of real-time insights.
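To make the reserve stock calculation concrete, here is a minimal sketch of one common textbook approach: a reorder point with safety stock, computed from historical daily sales. It is not taken from the company’s spreadsheets. The function name, the sample figures, the four-day lead time, and the service factor `z = 1.65` (roughly a 95% service level if demand is close to normally distributed) are all illustrative assumptions.

```python
import math
import statistics


def reorder_point(daily_sales, lead_time_days, z=1.65):
    """Return (safety_stock, reorder_point) from historical daily sales.

    Assumes demand is roughly normal and the supplier lead time is fixed.
    """
    mean_demand = statistics.mean(daily_sales)
    demand_stdev = statistics.stdev(daily_sales)  # sample standard deviation
    safety_stock = z * demand_stdev * math.sqrt(lead_time_days)
    return safety_stock, mean_demand * lead_time_days + safety_stock


# Hypothetical sales history for one part (units sold per day)
history = [10, 12, 8, 10, 10]
safety, reorder_at = reorder_point(history, lead_time_days=4)
print(f"safety stock: {safety:.1f} units, reorder at: {reorder_at:.1f} units")
```

Running the same calculation from a live database rather than a hand-maintained spreadsheet is what removes the stale-data and manual-miscalculation risks listed above.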

4. Architecting AWS + SaaS Solutions

Choosing the Right Tools:

To assess and choose the right tools for a digital transformation, frameworks like COBIT 5 and TOGAF are again highly effective. They offer methodologies for aligning IT strategy with business needs, managing risk, and planning the enterprise architecture. For example, COBIT 5’s Evaluate, Direct and Monitor (EDM) domain focuses on governing IT investments and ensuring that IT services meet business needs, while TOGAF’s Technology Architecture phase (probably even more pertinent here) helps in choosing the technology stack that supports business operations.

In TOGAF, the study of technology stack choices is typically represented using several architectural artifacts, which provide a structured and layered view of the Technology Architecture phase. The goal is to ensure that the selected technology components support the overall business architecture. Here’s how it’s often represented:

1. Architecture Definition Document (ADD)

- This document provides an overview of the architecture decisions, including technology stack choices, and justifies why each technology was selected based on business goals, security, and compliance requirements.

2. Technology Architecture Model

- The Technology Architecture Model is a visual diagram that captures the chosen technology components in relation to the company’s architecture. It typically includes representations of different technology domains like compute, storage, and integration.

3. Technology Reference Model (TRM)

- The TRM represents the technology components and services that support the architecture and explains how they interoperate. It maps the various technologies to platform services, middleware, and business services.

4. Capability Assessment Matrix

- This document evaluates the capability of each technology to meet business objectives, risk management, and compliance needs.

5. Gap Analysis (Architecture Roadmap)

- This artifact identifies gaps between the current technology stack and the desired future state.

As an outcome of these studies, I will present two alternatives for covering these processes in the new approach: one using a hybrid mix of AWS, various SaaS products, and a local server, and one using AWS only, so that we can genuinely compare the two. I’ll include a very quick glossary of these technologies at the end of this listing, so you can better understand how they relate to each business goal.

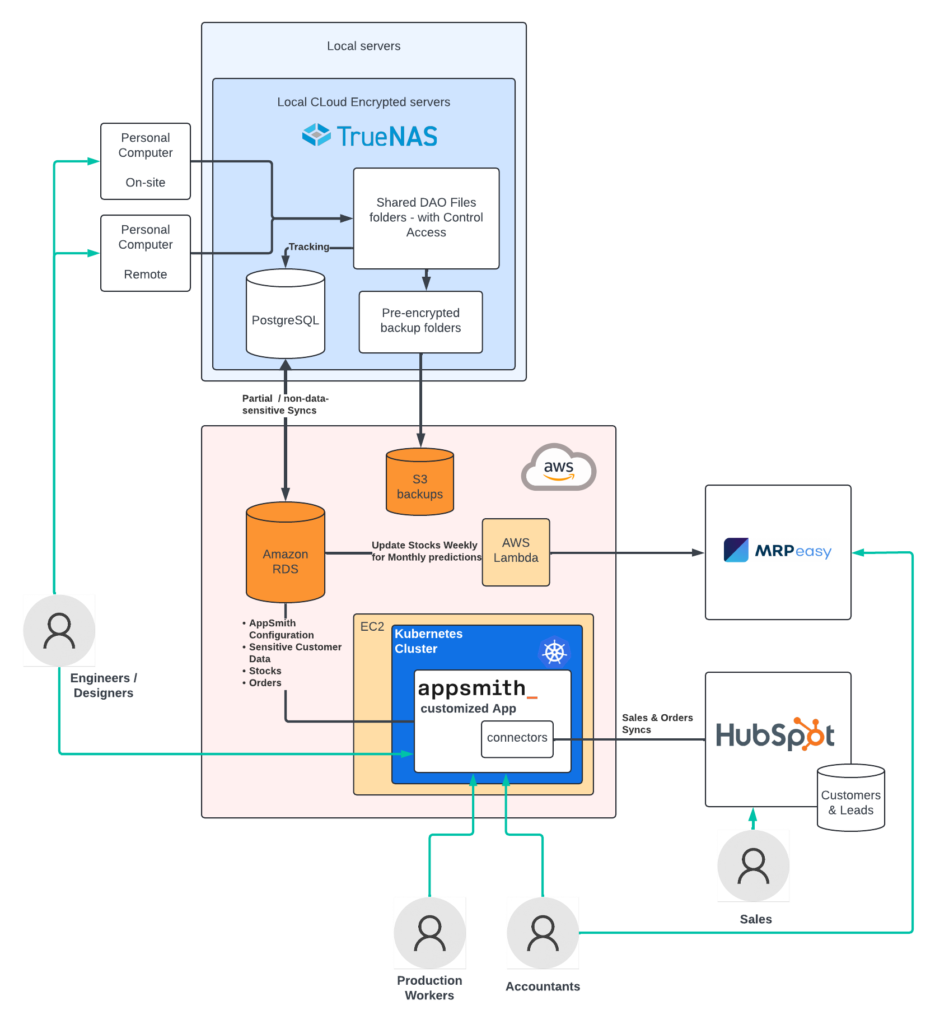

4.1. AWS/SaaS/Local Hybrid Approach

We prioritized the security of DAO files and designs, keeping them locally encrypted before syncing with the cloud, while remaining more flexible with customer data since government-grade threats are unlikely to target a regional business. This keeps the most sensitive assets under local control while the cloud provides scalable, managed security for everything else.

In the hybrid approach, we optimized scalability, efficiency, and integration for a regional car parts manufacturer. Here’s a summary:

- Appsmith: An open-source low-code tool for internal apps like CRM. Chosen for its direct HubSpot integration and cost-effectiveness.

- AWS EC2: Handles scalable cloud computing for DAO processing, supporting digital workflows and efficient cloud infrastructure.

- AWS S3 & TrueNAS: TrueNAS keeps critical files locally secure, while AWS S3 provides encrypted cloud backups, balancing compliance and scalability.

- AWS RDS: A managed relational database used for real-time data sync and centralized cloud data management.

- Kubernetes (K8s): Manages microservices for seamless deployment, used for stock flow services within the cloud environment.

- AWS Lambda: Automates data synchronization between cloud and local components, keeping stock and customer data up to date without manual intervention.

- MRPeasy: Cloud-based stock planning software that provides real-time inventory tracking, especially valuable for SMEs.

This hybrid solution provides real-time data insights, cost control, and data security, merging on-premise control with the scalability of cloud computing.

| Technology Stack Layer | Chosen Solution | Capability (Short Description) | Managing DAO Schema Files | Stocking and Delivery Prep | Managing Customer Leads and Orders | Predicting Reserve Stocks | Overall Cost (Monthly) |
|---|---|---|---|---|---|---|---|
| Server Layer | AWS EC2 | Scalable cloud compute | EC2 scales DAO processing | EC2 for running stocking & delivery app | – | – | $100-$200 |
| Storage Layer | AWS S3 + TrueNAS | Secure local and cloud backup | Encrypted DAO files synced from TrueNAS to S3 | TrueNAS for critical, S3 for less-sensitive backups | – | – | $50-$100 |
| Database Layer | AWS RDS + Local Database (TrueNAS) | Real-time sync with local backup | Metadata stored on RDS | RDS syncs stock data, backed up with TrueNAS | – | – | $150 |
| Security/Encryption Layer | AWS KMS + TrueNAS Encryption | Ensures compliance with local encryption | KMS encrypts uploads, TrueNAS secures locally | KMS encrypts data flow, TrueNAS for local data | – | – | $50 |
| Integration Layer | AWS Lambda + Kubernetes (K8s) | Simplified integration and container orchestration | Lambda syncs data across AWS services | K8s orchestrates microservices for stock flow | Lambda and K8s enable real-time CRM integration | Lambda syncs predictions between MRPeasy and AWS | $250 |
| Software Layer | Appsmith CRM Builder (Connectors) + Java mini-apps on EC2 | Custom DAO and stock applications with UI management | Java apps run DAO management + Appsmith UI | Proprietary stock and delivery software on EC2 + Appsmith UI | Appsmith provides CRM UI with connectors for AWS services | Custom connectors for MRPeasy integration | $200 (Support & Hosting) + Development Cost |
| SaaS Layer | MRPeasy | Stock management and automated sync | – | – | – | Automates reserve stock planning and tracking | €79 |
| SaaS Layer | HubSpot | CRM and lead management | – | – | Manages customer leads and sales pipeline | – | €200 |

4.2. AWS-Only Approach

In the AWS-only approach, all infrastructure and services are provided within the AWS cloud ecosystem. This approach is highly scalable and efficient, but storing sensitive DAO files solely on AWS can raise concerns about protecting intellectual property, especially since AWS is a US-based provider, even when the data sits in one of its European regions. Here’s how we managed the architecture with the selected technologies:

- Appsmith: Still used as an open-source low-code platform for building internal dashboards and interfaces, now applied more broadly since there’s no need for HubSpot integration.

- AWS EC2: Provides virtual servers to handle all computing needs, from managing DAO files to running stock and delivery workflows, ensuring scalable processing across business operations.

- AWS S3: Offers scalable cloud storage for all data, including DAO files, customer data, and inventory backups. While secure with encryption, concerns over data sovereignty may arise for storing sensitive IP designs solely on the AWS cloud.

- AWS RDS (Relational Database Service): Used for real-time data management such as storing customer data and inventory information, ensuring seamless data centralization and accessibility.

- AWS SageMaker: A cloud-based machine learning platform that handles predictive analytics for stock management, helping maintain optimal inventory levels.

- Kubernetes (K8s): Deployed on AWS for managing microservices, ensuring consistent orchestration of containerized applications across various AWS services.

- AWS Glue: Serves as the data integration (ETL) layer, moving and transforming data between AWS services such as S3, RDS, and SageMaker and automating those workflows.

This AWS-only solution integrates all infrastructure into a single cloud environment, minimizing on-premises setup but potentially introducing data sovereignty concerns for storing sensitive intellectual property.

| Technology Stack Layer | Chosen Solution | Capability (Short Description) | Managing DAO Schema Files | Stocking and Delivery Prep | Managing Customer Leads and Orders | Predicting Reserve Stocks | Overall Cost (Monthly) |
|---|---|---|---|---|---|---|---|
| Server Layer | AWS EC2 | High scalability for cloud computing | EC2 for DAO file processing | EC2 runs stock & delivery app | – | – | $100-$200 |
| Storage Layer | AWS S3 | Secure, scalable cloud backup | Stores DAO files, backed up securely | Stores stock and backup data | – | Stores stock data for analysis | $50-$100 |
| Database Layer | AWS RDS | Managed cloud database | Metadata stored in RDS | RDS for stock & delivery data | Stores customer information | Stores historical stock data | $150 |
| Security/Encryption Layer | AWS KMS | Managed encryption across AWS services | Encrypts DAO metadata on S3 | KMS secures stock data | KMS secures customer information | Encrypts SageMaker models | $50 |
| Integration Layer | AWS Glue + Kubernetes (K8s) | Container orchestration & integration | Glue syncs DAO data | K8s manages stock app microservices | Glue integrates data flow | Syncs predictive data with SageMaker | $250 |
| Software Layer | Appsmith CRM Builder (Connectors) + Java mini-apps on EC2 | Custom DAO and stock management | Java apps handle DAO operations | Manages stock & delivery workflows | UI for CRM data management | Integrates SageMaker outputs | $200 (Support & Hosting) + Development Cost |

4.3 Comparing both solutions

Hybrid approach:

| Technology Stack Layer | Overall Monthly Cost | Initial Development + Integration Cost |
|---|---|---|
| Server Layer (AWS EC2) | $150 | $4,000 |
| Storage Layer (AWS S3 + TrueNAS) | $75 | $3,000 |
| Database Layer (AWS RDS + TrueNAS) | $150 | $4,500 |
| Security/Encryption Layer (AWS KMS + TrueNAS Encryption) | $50 | $2,000 |
| Integration Layer (AWS Lambda + K8s) | $250 | $5,000 |
| Software Layer (Appsmith + Java Apps on EC2) | $200 + Development Cost | $7,500 (Development) + $3,000 (Integration) |
| SaaS Layer (MRPeasy) | €79 (~$85) | $500 |
| SaaS Layer (HubSpot) | €200 (~$210) | $500 |

AWS Only approach:

| Technology Stack Layer | Overall Monthly Cost | Initial Development + Integration Cost |
|---|---|---|
| Server Layer (AWS EC2) | $150 | $5,000 |
| Storage Layer (AWS S3) | $75 | $3,000 |
| Database Layer (AWS RDS) | $150 | $4,000 |
| Security/Encryption Layer (AWS KMS) | $50 | $2,000 |
| Integration Layer (AWS Glue + K8s) | $250 | $6,000 |
| Software Layer (Appsmith + Java Apps on EC2) | $200 + Development Cost | $8,000 (Development) + $4,000 (Integration) |
| AWS SageMaker (Predictive Stock Analysis) | $79 | $1,500 |

Overall Comparison

| Approach | Total Monthly Cost (Approx) | Initial Development + Integration Costs (Approx) | Risk Mitigation | Efficiency | Implementation Time | UX & Quality of Life |
|---|---|---|---|---|---|---|
| Hybrid | $1,170 – $1,270 | $30,000 | 8/10 | 9/10 | 7/10 | 8/10 |
| AWS-Only | $950 – $1,150 | $33,500 | 6/10 | 9/10 | 6/10 | 7/10 |
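As a quick sanity check, the per-layer figures from the two breakdown tables can be summed in a few lines of Python. This is only a sketch: the dictionaries copy the table rows, use the approximate dollar equivalents for the two SaaS subscriptions, and ignore the unspecified “Development Cost” part of the Software Layer’s monthly figure.

```python
# (monthly cost in USD, initial development + integration cost in USD) per layer,
# copied from the per-layer breakdown tables
hybrid = {
    "Server (EC2)": (150, 4_000),
    "Storage (S3 + TrueNAS)": (75, 3_000),
    "Database (RDS + TrueNAS)": (150, 4_500),
    "Security (KMS + TrueNAS)": (50, 2_000),
    "Integration (Lambda + K8s)": (250, 5_000),
    "Software (Appsmith + Java apps)": (200, 7_500 + 3_000),
    "SaaS (MRPeasy)": (85, 500),
    "SaaS (HubSpot)": (210, 500),
}
aws_only = {
    "Server (EC2)": (150, 5_000),
    "Storage (S3)": (75, 3_000),
    "Database (RDS)": (150, 4_000),
    "Security (KMS)": (50, 2_000),
    "Integration (Glue + K8s)": (250, 6_000),
    "Software (Appsmith + Java apps)": (200, 8_000 + 4_000),
    "SageMaker": (79, 1_500),
}


def totals(layers):
    """Sum the monthly and initial costs over all layers."""
    monthly = sum(m for m, _ in layers.values())
    initial = sum(i for _, i in layers.values())
    return monthly, initial


for name, layers in (("Hybrid", hybrid), ("AWS-only", aws_only)):
    monthly, initial = totals(layers)
    print(f"{name}: ${monthly:,}/month, ${initial:,} initial")
```

With these inputs, the hybrid rows add up to $1,170 per month and $30,000 up front, and the AWS-only rows to $954 per month and $33,500 up front.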

In conclusion, the scores and costs of the hybrid and AWS-only approaches are quite close. However, the risk mitigation provided by the hybrid approach, especially for securing sensitive DAO files, makes it the preferred choice. Additionally, the hybrid solution allows for reduced implementation time and the use of shared UI capabilities across specialized SaaS platforms like HubSpot and MRPeasy, which may enhance the user experience and support business expansion more effectively. The ability to combine the best of on-premise control with cloud scalability positions the hybrid approach as the more reliable solution for meeting the company’s long-term needs.

4.4 Architecture schema

The hybrid architecture combines on-premise servers with AWS cloud infrastructure to achieve both local compliance and global scalability. This approach follows the practices AWS recommends for building hybrid architectures, offering centralized control and data flexibility. Sensitive data, such as DAO files, is managed locally with TrueNAS, while less critical operations use AWS services for scalability and cost efficiency. For further insights, see AWS’s own guidance on hybrid architecture design.

4.5 Migration Strategy

The Strangler Pattern is an effective strategy for migrating from legacy systems to a modern AWS + SaaS hybrid architecture. By moving each service one at a time, it ensures minimal disruption, lower risk, and continuous operations during the migration process. Here’s how to apply it to the regional car parts manufacturer case:

- Sales and Customer Management:

- Start by migrating the sales and customer management services. Transition data from the old ERP system to HubSpot CRM, which will handle leads and customer interactions, and store related data securely in AWS RDS.

- Use Appsmith to create intuitive internal dashboards for the sales team, allowing them to access customer information and track leads. AWS Lambda will help keep the data in sync.

- To facilitate this migration, you may need to generate a few CSV files from existing Excel or Access databases. These CSV files can be imported into HubSpot and Appsmith to initialize the customer and sales data in the new system, ensuring smooth onboarding.

- DAO Management and Design Team:

- Next, transition the DAO management and design processes. The sensitive DAO files will be stored locally on TrueNAS with encryption, and encrypted backups will be synced to AWS S3.

- The computing power needed for DAO processing will be handled by AWS EC2, and Appsmith will provide a customized user interface for designers to easily access and collaborate on these files. Again, AWS Lambda will facilitate data synchronization between the local and cloud environments.

- During this migration phase, it’s likely you’ll also need to export initial data from current systems to ensure continuity for the design and engineering teams.

- Remaining Components (Production, Stocking, Financials):

- Finally, move production, stocking, and financial processes to the new architecture.

- Use MRPeasy to handle stock planning and production, supported by AWS Lambda for real-time synchronization. Production workflows can be orchestrated using Kubernetes (K8s), providing efficient container management for newly deployed services.

- AWS RDS will be used for financial data, and internal application interfaces for production and financial processes will be managed through Appsmith.

The Strangler Pattern allows a gradual and structured migration from the old ERP system, replacing it piece by piece with the new architecture while ensuring smooth, ongoing business operations. By starting with CSV exports from legacy Excel or Access files, this approach makes sure the initial data is available and ready for use in the new services, making the transition as seamless as possible. The migration begins with the sales and customer data (the least sensitive), then moves to critical design processes, and finally migrates the remaining components once the rest of the architecture is well-established and tested.
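The incremental cut-over described above can be pictured as a thin routing facade that sends each business domain either to the legacy ERP or to its replacement, and flips one domain at a time. The sketch below is purely illustrative: the `StranglerFacade` class, the domain names, and the handler functions are hypothetical stand-ins, not part of any AWS or SaaS API.

```python
from typing import Callable, Dict

Handler = Callable[[dict], str]


def legacy_erp(request: dict) -> str:
    """Stand-in for a call into the old ERP."""
    return f"legacy ERP handled {request['action']}"


def hubspot_crm(request: dict) -> str:
    """Stand-in for the new CRM service."""
    return f"new CRM handled {request['action']}"


class StranglerFacade:
    """Routes each domain to the legacy system until it has been migrated."""

    def __init__(self, legacy: Handler):
        self._legacy = legacy
        self._migrated: Dict[str, Handler] = {}

    def migrate(self, domain: str, new_handler: Handler) -> None:
        # Flip a single domain to its new implementation
        self._migrated[domain] = new_handler

    def rollback(self, domain: str) -> None:
        # Send the domain back to the legacy system if the new service misbehaves
        self._migrated.pop(domain, None)

    def handle(self, domain: str, request: dict) -> str:
        return self._migrated.get(domain, self._legacy)(request)


facade = StranglerFacade(legacy_erp)
facade.migrate("sales", hubspot_crm)  # phase 1: sales and customer management

print(facade.handle("sales", {"action": "create_lead"}))
print(facade.handle("stock", {"action": "update_quantity"}))
```

Because unmigrated domains keep falling through to the legacy handler, each phase can be rolled back on its own without touching the others.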

5. AWS Security and IAM Best Practices

AWS Security is fundamental to ensuring that sensitive business data, such as DAO files and customer information, remains protected from both external and internal threats. AWS follows the Shared Responsibility Model for security, where AWS handles the security “of” the cloud infrastructure, and the user is responsible for securing their data “in” the cloud. Here’s how it applies to the current setup:

AWS IAM and Security

AWS Identity and Access Management (IAM) is the core service for managing user access and controlling permissions. It’s critical to implement IAM roles, policies, and multi-factor authentication (MFA) to tightly control access to cloud resources. IAM ensures that only authorized users can perform specific actions, minimizing the risk of data breaches or unauthorized access.

- IAM Roles: Use IAM roles to define the permissions that applications and services need to interact with AWS. For example, AWS Lambda requires roles to read from RDS or write to S3.

- Policies: Create fine-grained policies that enforce the principle of least privilege, meaning each user or role only has access to the data and actions needed for its specific tasks.

- MFA: Adding multi-factor authentication adds another layer of security, protecting sensitive data by requiring an additional form of identification beyond just a password.
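As an illustration of least privilege, the sketch below builds an IAM policy document that lets a role read objects under a single S3 prefix and nothing else. The bucket name, prefix, statement ID, and helper function are hypothetical; the JSON layout (`Version`, `Statement`, `Effect`, `Action`, `Resource`) follows the standard IAM policy grammar.

```python
import json


def read_only_prefix_policy(bucket: str, prefix: str) -> dict:
    """Build a policy allowing s3:GetObject on one prefix of one bucket."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ReadStockExportsOnly",
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                "Resource": f"arn:aws:s3:::{bucket}/{prefix}/*",
            }
        ],
    }


# Hypothetical bucket and prefix for the stock sync function
policy = read_only_prefix_policy("your-s3-bucket-name", "stock-exports")
print(json.dumps(policy, indent=2))
```

If this is the only S3 permission attached to the stock sync function’s execution role, that function stays away from DAO backups stored under other prefixes or buckets.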

Encryption Best Practices

Encryption is a crucial component of the AWS security strategy. AWS provides several types of encryption—server-side encryption and client-side encryption—to secure data in S3 and RDS.

- AWS S3 Encryption: AWS offers server-side encryption for S3, including SSE-S3 (AWS managed keys) and SSE-KMS (AWS Key Management Service), where keys can be managed by AWS or users. This ensures data remains encrypted at rest, making it inaccessible without the proper decryption keys.

- Client-Side Encryption: To take security further, sensitive files such as DAO files are encrypted client-side before they are uploaded to the cloud. The data is already encrypted when it leaves your local servers, so AWS never sees the raw, unencrypted content. This is the solution we adopted in our hybrid approach for maximum security around DAO files.

- AWS RDS Encryption: RDS instances can also be encrypted at rest using AWS KMS, securing both the data and its automated backups, snapshots, and replicas. This ensures that even if physical security is compromised, the data remains inaccessible without the proper decryption keys.
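Client-side encryption can be sketched in Python as follows. This is an example under stated assumptions rather than the exact setup described in this article: it relies on the third-party `cryptography` package and its Fernet recipe, the `upload_encrypted` helper and its arguments are hypothetical, and the key is generated inline only for the demo. In a real deployment the key would be kept in a secrets store on the local side.

```python
from cryptography.fernet import Fernet


def encrypt_bytes(plaintext: bytes, key: bytes) -> bytes:
    """Encrypt data locally; only the returned ciphertext should leave the premises."""
    return Fernet(key).encrypt(plaintext)


def decrypt_bytes(ciphertext: bytes, key: bytes) -> bytes:
    """Reverse of encrypt_bytes, run after downloading a backup."""
    return Fernet(key).decrypt(ciphertext)


def upload_encrypted(s3_client, bucket: str, object_key: str,
                     plaintext: bytes, key: bytes) -> None:
    """Encrypt first, then upload; s3_client is expected to be boto3.client("s3")."""
    s3_client.put_object(
        Bucket=bucket,
        Key=object_key,
        Body=encrypt_bytes(plaintext, key),
        ServerSideEncryption="aws:kms",  # SSE-KMS on top of the client-side layer
    )


# Local round trip with a throwaway key (no AWS call involved)
key = Fernet.generate_key()
ciphertext = encrypt_bytes(b"example DAO file content", key)
assert decrypt_bytes(ciphertext, key) == b"example DAO file content"
```

Fernet works on whole byte strings in memory, so this shape suits individual design files; very large archives would need a streaming scheme instead.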

Shared Responsibility Model

In the Shared Responsibility Model:

- AWS is responsible for securing the infrastructure, including compute, storage, networking, and database services. This includes physical security of data centers and protection against external threats.

- The customer (you) is responsible for securing data within the services. This involves configuring IAM properly, encrypting sensitive data, setting up firewalls, and monitoring access.

By implementing these security practices, this architecture benefits from AWS’s robust external security measures, while the company takes responsibility for securing data at rest and in transit. This includes managing encryption keys, enforcing access control through IAM, and configuring firewalls for EC2 instances—all aimed at ensuring that both external threats and internal risks are mitigated effectively.

6. Practical Migration Example: Java Mainframe to AWS + SaaS

This example demonstrates migrating from a legacy Java mainframe to a modern AWS microservices + SaaS solution for a regional car parts manufacturer. The migration aims to modernize and optimize operations for improved efficiency, scalability, and data security.

Step 1: Migrating Java Mainframe Processes

The migration begins with breaking down the legacy Java mainframe monolithic system into smaller, more manageable AWS microservices. This approach helps to modernize processes in a phased manner, improving scalability and enabling more agile business operations.

- Data Extraction:

- Extract critical data, including customer, sales, and stock data from the Java mainframe system. This data can be exported as CSV files and imported into Amazon RDS for easy access.

- These CSV files will also help initialize HubSpot and Appsmith by providing existing customer and order data.
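The CSV import step could look like the sketch below. To keep it self-contained, it uses Python’s built-in `sqlite3` as a stand-in for the Amazon RDS database; with RDS you would swap in a PostgreSQL or MySQL driver and its placeholder syntax, while the overall DB-API pattern stays the same. The table layout, column names, and sample rows are invented for illustration.

```python
import csv
import io
import sqlite3

# Hypothetical export from the legacy system
CSV_EXPORT = """customer_id,name,email
1,Garage Dupont,contact@garage-dupont.example
2,Auto Martin,info@auto-martin.example
"""


def import_customers(conn, csv_file) -> int:
    """Load customer rows from a CSV file object; returns the number of rows inserted."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS customers ("
        "customer_id INTEGER PRIMARY KEY, name TEXT NOT NULL, email TEXT)"
    )
    rows = [
        (int(r["customer_id"]), r["name"].strip(), r["email"].strip())
        for r in csv.DictReader(csv_file)
    ]
    with conn:  # commit all rows together, or roll back on error
        conn.executemany(
            "INSERT INTO customers (customer_id, name, email) VALUES (?, ?, ?)", rows
        )
    return len(rows)


conn = sqlite3.connect(":memory:")
inserted = import_customers(conn, io.StringIO(CSV_EXPORT))
print(f"imported {inserted} customers")
```

Loading everything in one transaction means a malformed row aborts the whole import instead of leaving the new database half-populated.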

Step 2: Syncing Data to MRPeasy Using AWS Lambda

AWS Lambda will be used to keep the stock data in sync with MRPeasy. This ensures that inventory data remains updated, reducing discrepancies between systems.

Example AWS Lambda Code for Syncing Stock Data:

```python
import json
import os

import boto3
import requests  # not in the Lambda runtime by default; bundle it in the package or a layer

# MRPeasy endpoint and credentials. The URL and the Bearer scheme are placeholders:
# check them against MRPeasy's API documentation before use.
MRPEASY_API_URL = "https://api.mrpeasy.com/v1/stocks/"
# Prefer an environment variable over a hard-coded key
MRPEASY_API_KEY = os.environ.get("MRPEASY_API_KEY", "YOUR_MRPEASY_API_KEY")

# AWS setup
s3_client = boto3.client("s3")


def lambda_handler(event, context):
    try:
        # Load the stock export (a JSON list of items) from the S3 bucket
        bucket_name = "your-s3-bucket-name"
        key = "path/to/stock_data.json"
        response = s3_client.get_object(Bucket=bucket_name, Key=key)
        stock_data = json.loads(response["Body"].read())

        headers = {
            "Authorization": f"Bearer {MRPEASY_API_KEY}",
            "Content-Type": "application/json",
        }

        # Iterate through stock data and sync each item with MRPeasy
        for stock in stock_data:
            payload = {
                "item_id": stock["item_id"],
                "quantity": stock["quantity"],
                "location": stock["location"],
            }
            mrp_response = requests.post(
                MRPEASY_API_URL, headers=headers, json=payload, timeout=10
            )
            # Treat any 4xx/5xx answer as a failure (a create call may return 201)
            if not mrp_response.ok:
                raise Exception(f"Failed to sync stock data: {mrp_response.text}")

        return {
            "statusCode": 200,
            "body": json.dumps("Successfully synced stock data with MRPeasy"),
        }
    except Exception as e:
        print(e)
        return {
            "statusCode": 500,
            "body": json.dumps("Error in syncing stock data"),
        }
```

Registering a Cron Job for AWS Lambda Sync

To ensure data consistency, a cron job can be registered to run the AWS Lambda function every hour, syncing stock data between MRPeasy and AWS.

Registering the Cron Job:

- You can set up an AWS CloudWatch Events rule (the feature now lives in Amazon EventBridge) to trigger the Lambda function at regular intervals (e.g., hourly).

Example AWS CloudWatch Events Rule:

- Navigate to AWS CloudWatch in the AWS console.

- Go to Events -> Rules.

- Create a new rule, and under Event Source, choose Schedule and specify `rate(1 hour)` to run it hourly.

- Set the target to your AWS Lambda function.

This setup will ensure hourly syncs between AWS and MRPeasy, maintaining consistent stock data.
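The same schedule can also be created programmatically. The sketch below assumes `boto3` with configured credentials; the rule name, statement ID, target ID, and function ARN are placeholders. It uses the EventBridge (`events`) client’s `put_rule` and `put_targets` calls and the Lambda client’s `add_permission` call, and takes the clients as arguments so the function can be exercised without touching AWS.

```python
def schedule_hourly_sync(events_client, lambda_client, function_arn: str,
                         rule_name: str = "hourly-stock-sync") -> str:
    """Create an hourly schedule rule that invokes the given Lambda function.

    events_client and lambda_client are expected to be boto3.client("events")
    and boto3.client("lambda").
    """
    rule_arn = events_client.put_rule(
        Name=rule_name,
        ScheduleExpression="rate(1 hour)",
        State="ENABLED",
    )["RuleArn"]

    # Allow the rule to invoke the function
    lambda_client.add_permission(
        FunctionName=function_arn,
        StatementId=f"{rule_name}-invoke",
        Action="lambda:InvokeFunction",
        Principal="events.amazonaws.com",
        SourceArn=rule_arn,
    )

    # Point the rule at the function
    events_client.put_targets(
        Rule=rule_name,
        Targets=[{"Id": "stock-sync-lambda", "Arn": function_arn}],
    )
    return rule_arn


# Example call (requires boto3 and AWS credentials):
#   import boto3
#   schedule_hourly_sync(boto3.client("events"), boto3.client("lambda"), "<function ARN>")
```

Note that `add_permission` fails if a statement with the same ID already exists, so a rerun needs either a new statement ID or a cleanup of the old permission first.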

Step 3: Designing and Deploying Appsmith for Internal Applications

Appsmith provides an easy-to-use platform to develop CRM dashboards and other internal applications quickly. Here’s how to deploy and utilize Appsmith for the new AWS-based architecture:

- Deployment:

- Appsmith is deployed on a Kubernetes (K8s) cluster hosted on AWS EC2 instances. This allows for containerized deployment, providing easy scalability and redundancy.

- Amazon RDS stores all application data, including configuration and user information.

- Internal Apps:

- Create internal dashboards using Appsmith’s drag-and-drop interface for managing DAO files, stock tracking, and production management. Appsmith’s REST API capabilities make it easy to connect to other cloud services like AWS RDS, HubSpot, and MRPeasy.

Step 4: Secure Backups of DAO Files to S3

The DAO files must be securely backed up from TrueNAS to AWS S3 for redundancy. Below is a shell script to encrypt files and back them up to S3.

Example Script for Backing Up DAO Files:

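```bash
#!/bin/bash
set -euo pipefail

# Paths
SOURCE_DIR="/path/to/dao_files"
BACKUP_DIR="/path/to/encrypted_backup"
S3_BUCKET="your-s3-bucket-name"
S3_FOLDER="dao_backups"
# Passphrase file readable only by the backup user (hypothetical path)
PASSPHRASE_FILE="/path/to/backup_passphrase"

# Compute the archive name once so both steps use the same file
ARCHIVE="$BACKUP_DIR/dao_files_$(date +%Y%m%d%H%M).tar.gz"

# Archive and compress the DAO files
tar -czf "$ARCHIVE" "$SOURCE_DIR"

# Encrypt the archive locally (tar alone does not encrypt), then drop the plaintext copy
openssl enc -aes-256-cbc -pbkdf2 -salt -in "$ARCHIVE" -out "$ARCHIVE.enc" -pass "file:$PASSPHRASE_FILE"
rm -f "$ARCHIVE"

# Upload the encrypted file to S3
aws s3 cp "$ARCHIVE.enc" "s3://$S3_BUCKET/$S3_FOLDER/"
```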

Registering a Cron Job for DAO File Backup:

- Register a cron job on the TrueNAS server to run this backup script hourly:

0 * * * * /path/to/backup_script.sh

This cron job will run every hour (0 * * * *) to ensure DAO files are regularly encrypted and backed up to AWS S3 for secure and redundant storage.

This migration strategy uses the Strangler Pattern to move the entire system incrementally. By starting with sales and customer data and moving to design processes, and finally to production and stock management, the transition minimizes the risk of disruptions. The use of AWS Lambda for synchronization, Appsmith for UI management, and AWS S3 for secure cloud backups ensures that all data is handled efficiently and securely, while the existing infrastructure is gradually replaced.

7. Conclusion

In this case study, we’ve examined the journey of transitioning a regional car parts manufacturer from a legacy Java mainframe system to a hybrid AWS and SaaS solution. The approach leveraged AWS infrastructure such as EC2, RDS, and S3, as well as Appsmith, MRPeasy, and TrueNAS, to create a secure, scalable, and modern architecture.

The key takeaways are:

- Cost Reduction: By moving critical infrastructure to the cloud and integrating it with SaaS tools, the solution reduces on-premises hardware and maintenance costs.

- Enhanced Security: The combination of local storage (TrueNAS) for sensitive data and AWS security features ensures high levels of protection while benefiting from cloud scalability.

- Improved Workflow Efficiency: Tools like Appsmith simplify the creation of internal apps, while AWS Lambda automates data sync, resulting in a more efficient and connected workflow.

Even writing this article, I found that significantly more time was needed to design the solution and study possible requirements and components than it would take to actually implement the coding phase. This serves as a reminder that, in real-world projects, careful preliminary analysis and thorough information gathering are crucial for a smooth and successful transformation. Properly investing time in designing and understanding all requirements upfront helps ensure a minimally disruptive transition while maximizing success.

If you’re considering taking the next step toward digital transformation, explore AWS solutions with a free trial or consult AWS experts to get tailored migration advice.